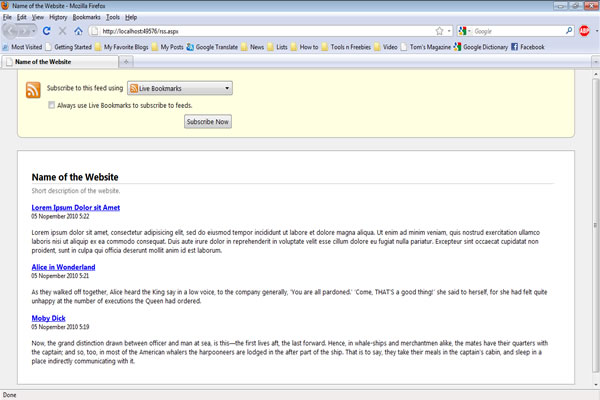

We will be using our most popular generic scraper - Web Scraper (apify/web-scraper). Get a free Apify account if you haven't already got one, then open the scraper in Apify Console and create a task for it.

After you've created a new task, open the "Input and options" tab. Here we will have to configure three fields:

- Start-URLs - simply enter the URL for the scraper to start at.
- Pseudo-URLs - this is a pattern for URLs you want the scraper to visit. The URLs we are after all share the same form, with a wildcard that stands for any series of characters.
- Page function - here the programmer fun starts 🎡.

We won't need any other configuration fields to accomplish our task. But to put together the page function, we will have to look more deeply into the HTML source code of the changelog page. For more information on the various inputs of Web Scraper, see its documentation.

To summarize what we have just configured:

- The scraper opens the Start-URL, finds all the URLs matching our only Pseudo-URL, and adds these URLs to the queue.
- Then the scraper opens all of them one by one.
- The page function gets executed at each of the pages - both the Start-URL and the pages matching the Pseudo-URL.
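The crawl behaviour summarized above can be sketched in a few lines of plain JavaScript. This is only an illustration under stated assumptions, not Web Scraper's actual implementation: the `example.com` URLs, the in-memory `site` object, and the helpers `pseudoUrlToRegExp` and `crawl` are all hypothetical, and the sketch assumes that the part of a Pseudo-URL enclosed in square brackets is a regular expression (so `[.*]` matches any series of characters).

```javascript
// Turn a pseudo-URL such as "https://example.com/changelog/[.*]" into a RegExp.
// Assumption: text inside [] is a regular expression, everything else is literal.
// Simplification: a bracketed part must not itself contain "]".
function pseudoUrlToRegExp(purl) {
    let pattern = '';
    let i = 0;
    while (i < purl.length) {
        if (purl[i] === '[') {
            const end = purl.indexOf(']', i);
            if (end === -1) throw new Error('Unclosed [ in pseudo-URL');
            pattern += `(${purl.slice(i + 1, end)})`;
            i = end + 1;
        } else {
            // Escape characters that are special in regular expressions.
            pattern += purl[i].replace(/[.*+?^${}()|[\]\\]/g, '\\$&');
            i += 1;
        }
    }
    return new RegExp(`^${pattern}$`);
}

// Hypothetical in-memory "website" so the sketch runs without network access.
const site = {
    'https://example.com/changelog': {
        title: 'Changelog',
        links: [
            'https://example.com/changelog/v1',
            'https://example.com/changelog/v2',
            'https://example.com/about',
        ],
    },
    'https://example.com/changelog/v1': { title: 'Version 1', links: [] },
    'https://example.com/changelog/v2': { title: 'Version 2', links: [] },
    'https://example.com/about': { title: 'About', links: [] },
};

// Open each queued page, run the page function on it, and enqueue
// every not-yet-seen link that matches one of the pseudo-URLs.
function crawl({ startUrls, pseudoUrls, pageFunction }) {
    const matchers = pseudoUrls.map(pseudoUrlToRegExp);
    const queue = [...startUrls];
    const seen = new Set(queue);
    const results = [];
    while (queue.length > 0) {
        const url = queue.shift();
        const page = site[url];
        if (!page) continue;
        results.push(pageFunction({ url, page }));
        for (const link of page.links) {
            if (!seen.has(link) && matchers.some((re) => re.test(link))) {
                seen.add(link);
                queue.push(link);
            }
        }
    }
    return results;
}

const results = crawl({
    startUrls: ['https://example.com/changelog'],
    pseudoUrls: ['https://example.com/changelog/[.*]'],
    pageFunction: ({ url, page }) => ({ url, title: page.title }),
});
console.log(results);
```

In this sketch the page function runs on the start page and on the two pages matching the Pseudo-URL, while the `/about` link is never queued because it does not match the pattern.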
